Let’s talk about the edge cloud architecture

How should you plan it? Where should the controller be placed? And where should the rest of the functions go?

These questions are the first ones that pop up when a telco wants to design its edge network.

After all, the reason a telco opts for edge computing in addition to cloud computing is to run real-time functions closer to the user, so it is important to focus on where functions are placed in the edge cloud architecture.

It boils down to where the control functions are placed in edge computing architectures.

In addition, the edge architecture should be flexible enough.

In other words, the architecture should not be tied to only ONE specific environment, such as containers, VMs, or even bare metal.

Rather, it should be flexible enough to run any environment. The architecture should also apply equally whether an operator wants to use a public cloud or a private cloud.

With these factors in mind, there are two popular architecture models, which I will explain below. To understand them, however, it is important to first understand the difference between central data centers and edge data centers.

Central Data Centers vs Edge Data Centers

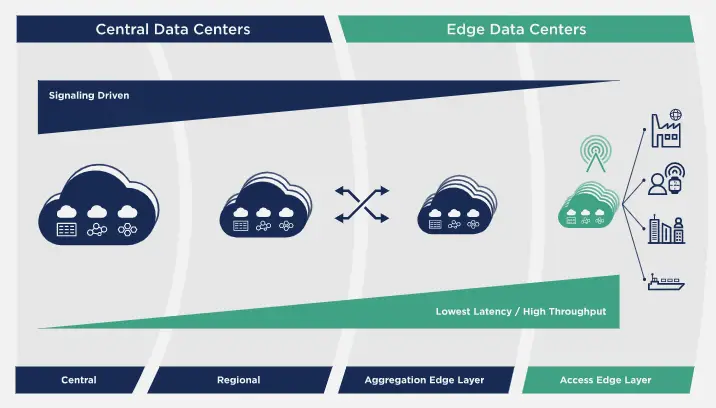

Central data centers (the central cloud) are the familiar ones: centralized and far from users. Edge data centers are located closer to the users, at the network edge, as shown in the accompanying diagram from OpenStack.

The central cloud runs control plane or signaling plane functions. Examples from core networks include the signaling plane of IMS and the control plane of EPC.

The distributed edge data centers, in contrast, mainly run “user” functions. These functions are throughput-intensive and latency-sensitive (also called real-time), so they should run as close to the user as possible. One example of a user function is the UPF in 5G. Applications well suited to the edge network include video surveillance, CDNs, and AR/VR.

Also, in the C-RAN approach, CUs and DUs can run closer to the tower, in the edge data centers.

So in summary, the general principle for deciding what to run in the central layer versus the edge layer is as follows:

- If the workload is control plane/signaling intensive, it is run at the central location

- If the workload is throughput intensive and/or latency-sensitive, it is run from the edge location.
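The rule of thumb above can be sketched as a small placement function. This is only an illustration: the `Workload` class, its flags, and the `place` function are hypothetical names, and the tie-break (a workload that is both signaling-heavy and latency-sensitive goes to the edge) is an assumption rather than something the rule itself states.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of a network function or application."""
    name: str
    control_plane_intensive: bool = False
    throughput_intensive: bool = False
    latency_sensitive: bool = False


def place(workload: Workload) -> str:
    """Return "edge" or "central" following the rule of thumb above."""
    # Throughput-intensive and/or latency-sensitive workloads run at the edge.
    # Assumption: these flags win if the workload is also signaling-heavy.
    if workload.throughput_intensive or workload.latency_sensitive:
        return "edge"
    # Control plane / signaling workloads run centrally.
    # Assumption: anything unclassified also defaults to the central site.
    return "central"


workloads = [
    Workload("IMS signaling", control_plane_intensive=True),
    Workload("EPC control plane", control_plane_intensive=True),
    Workload("5G UPF", throughput_intensive=True, latency_sensitive=True),
    Workload("CDN cache", throughput_intensive=True),
]

placement = {w.name: place(w) for w in workloads}
for name, site in placement.items():
    print(f"{name}: {site}")
```

In a real design the inputs would be measured traffic profiles and latency budgets rather than booleans, but the decision has the same shape.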

Two Models of Edge Cloud Architecture

While there may be a lot of edge computing architecture models, the following two are the popular ones.

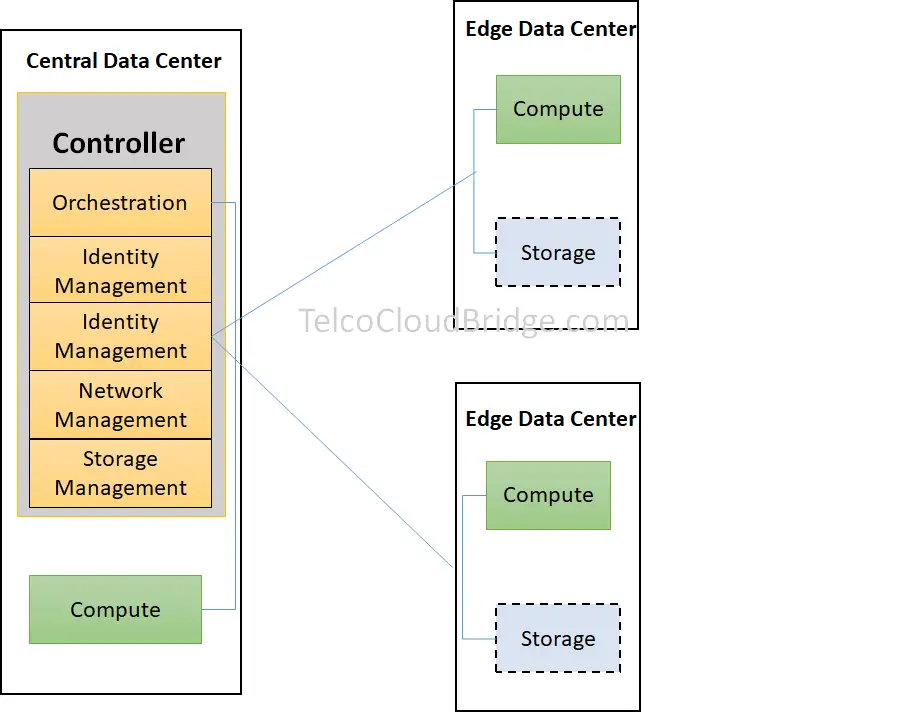

Centralized Control Plane

In the centralized control plane model, the controller is placed in the central data center, while the edge data centers carry only the compute nodes, sometimes also called edge servers.

The controller includes the following functions:

- orchestration

- authentication

- storage management

- image management

Management and orchestration are done centrally, so the advantage is “ease of control” from a central location. On the downside, an edge data center can become isolated if communication between the two data centers is lost, leaving the edge data center running without any control.

Secondly, for such a model to work effectively, there must be a good connectivity layer between the edge data centers and the central data center, because the model depends heavily on that connectivity.

This takes us to the other design of edge cloud architecture.
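To make the connectivity dependency concrete, here is a minimal sketch of a central controller that considers an edge site isolated once its heartbeats stop arriving. The `CentralController` class, the heartbeat mechanism, and the 30-second timeout are all assumptions made for illustration; they are not taken from any particular cloud platform.

```python
class CentralController:
    """Hypothetical central controller that tracks edge-site heartbeats."""

    def __init__(self, heartbeat_timeout_s: float = 30.0) -> None:
        self.heartbeat_timeout_s = heartbeat_timeout_s
        self.last_seen = {}  # site name -> time of last heartbeat (seconds)

    def heartbeat(self, site: str, now: float) -> None:
        """Record that an edge site reported in at time `now`."""
        self.last_seen[site] = now

    def is_reachable(self, site: str, now: float) -> bool:
        """A site is reachable if it reported within the timeout window."""
        last = self.last_seen.get(site)
        return last is not None and now - last <= self.heartbeat_timeout_s

    def isolated_sites(self, now: float) -> list:
        """Known sites whose heartbeats have stopped arriving."""
        return sorted(s for s in self.last_seen if not self.is_reachable(s, now))

    def schedule(self, site: str, workload: str, now: float) -> str:
        """Place a workload; fails for isolated sites (no local controller)."""
        if not self.is_reachable(site, now):
            raise RuntimeError(f"{site} is isolated; cannot place {workload}")
        return f"{workload} placed on {site}"


controller = CentralController(heartbeat_timeout_s=30.0)
controller.heartbeat("edge-a", now=0.0)
controller.heartbeat("edge-b", now=0.0)
controller.heartbeat("edge-a", now=40.0)  # edge-b's link is down; only edge-a reports

isolated = controller.isolated_sites(now=50.0)
print(isolated)
print(controller.schedule("edge-a", "upf-1", now=50.0))
```

The compute nodes at the isolated site may keep running their existing workloads, but nothing new can be placed or changed there until the link recovers, which is the downside described above.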

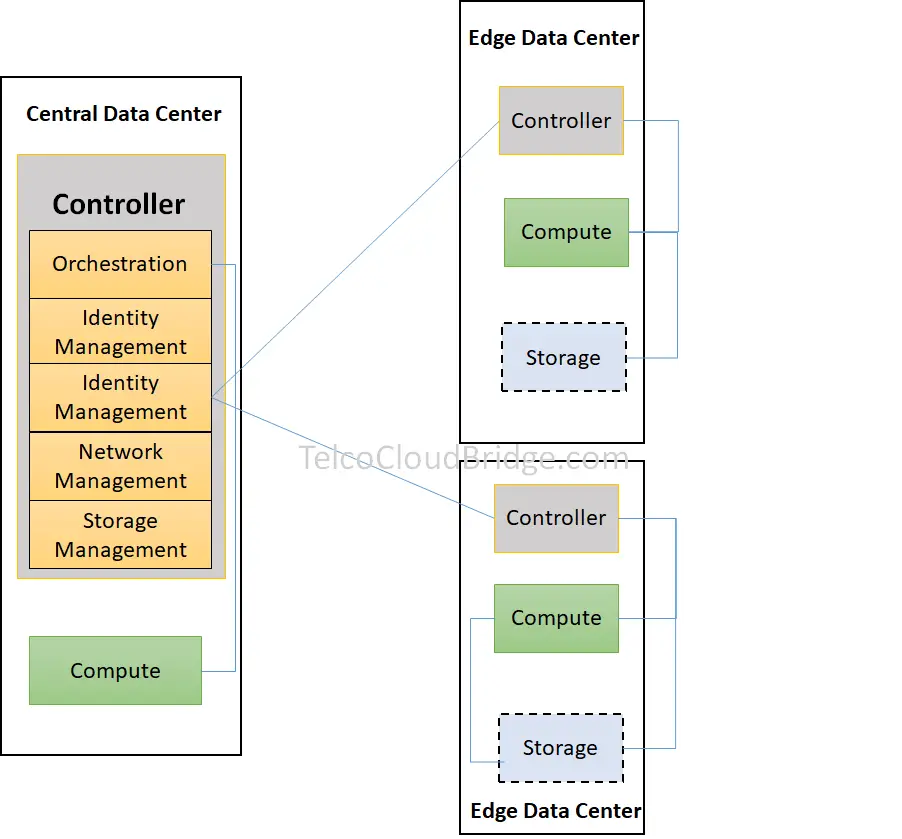

Distributed Control Plane

In the distributed control plane architecture, a controller instance runs in every edge data center, making each one autonomous. In other words, the edge server runs both the control functions and the compute functions.

There are a couple of ways to operate such a model. One way is to create a federation of edge data centers and connect their databases so that the infrastructure operates end to end as a whole. Another way is to synchronize the databases across sites so that configuration stays consistent across the edge data centers.

This model provides more resilience: a loss of communication between the central data center and an edge data center has less impact, because the configurations required to run the edge data center are managed locally.
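The synchronization option can be sketched as follows. This is a simplified illustration, assuming each site keeps a local key-value configuration store with a per-key version counter and that the highest version wins on merge; real deployments would rely on their database's own replication, and the `EdgeSite` and `synchronize` names are hypothetical.

```python
class EdgeSite:
    """Hypothetical edge site with its own local configuration store."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.config = {}  # key -> (version, value)

    def set(self, key: str, value) -> None:
        """Local write: works even when other sites are unreachable."""
        version = self.config.get(key, (0, None))[0] + 1
        self.config[key] = (version, value)

    def get(self, key: str):
        return self.config[key][1]

    def merge_from(self, other: "EdgeSite") -> None:
        """Adopt any entry for which the other site holds a newer version."""
        for key, (version, value) in other.config.items():
            if version > self.config.get(key, (0, None))[0]:
                self.config[key] = (version, value)


def synchronize(sites: list) -> None:
    """Pairwise merge so every site ends up with the newest version per key.

    Assumption: equal versions with different values are not reconciled;
    each site simply keeps its own copy in that case.
    """
    for target in sites:
        for source in sites:
            if source is not target:
                target.merge_from(source)


a, b, c = EdgeSite("edge-a"), EdgeSite("edge-b"), EdgeSite("edge-c")
a.set("mtu", 1500)
b.set("mtu", 9000)
b.set("mtu", 9100)          # second write at edge-b, so version 2
a.set("dns", "10.0.0.2")    # only edge-a knows this key so far

synchronize([a, b, c])
print(a.get("mtu"), b.get("dns"), c.get("mtu"))
```

Because every write lands in the local store first, a site keeps operating on its own configuration while a link is down and catches up at the next synchronization.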

Note that these architectures would apply also to the public clouds. For example, a telco may decide to use a public cloud at its edge node instead of its own cloud. In that case, both the above design options are also applicable.

In summary, designing a network edge is not a random exercise; deliberate attention should be paid to the choice among the available architectures.

Some factors that can affect your decision are the following:

1. The choice of deployment depends on your requirements for scalability, resiliency, cost-effectiveness, etc.

2. Do you have sufficient space in the edge data centers to accommodate the control functions?

3. What level of redundancy do you need for the network edge?

4. What are the geographical distances? If the distances are small, the centralized model is easy to run and manage.
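As a rough way to see how these questions interact, the sketch below turns three of them into a simple decision helper. The function name, its inputs, and the ordering of the checks are assumptions made for illustration (in particular, treating long distances as a reason to lean toward the distributed model); it is a starting heuristic, not a design rule.

```python
def suggest_model(edge_has_room_for_controller: bool,
                  needs_edge_autonomy: bool,
                  sites_are_close: bool) -> str:
    """Suggest "centralized" or "distributed" from the checklist above."""
    # No space for control functions at the edge rules out the distributed model.
    if not edge_has_room_for_controller:
        return "centralized"
    # A high redundancy requirement favors a local controller at every site.
    if needs_edge_autonomy:
        return "distributed"
    # Short distances make the centralized model easy to run and manage.
    if sites_are_close:
        return "centralized"
    # Assumption: over long distances the link is the weak point.
    return "distributed"


print(suggest_model(edge_has_room_for_controller=True,
                    needs_edge_autonomy=False,
                    sites_are_close=True))
print(suggest_model(edge_has_room_for_controller=True,
                    needs_edge_autonomy=True,
                    sites_are_close=True))
```

Cost and scalability requirements are left out of the sketch because they depend on figures only the operator has.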

References