Think about NFV, and you think about x86 processors. The two are inseparable, aren’t they?

No matter how simple a processor (the compute part) sounds, I bet a lot of people don’t know that the compute domain in NFV is not just the compute processor of the node. It is actually much more.

The fact is that, by ETSI definitions, “Compute Domain” and “Compute Node” in NFV do not mean one and the same thing. Know the difference and you will avoid a lot of confusion in understanding the basic NFV architecture, and avoid misunderstandings when communicating with a vendor or customer on this subject.

Of course, you don’t want to go wrong with the basic building blocks of NFV, do you?

And not only that: if you stay until the end, all the essential server terminology that you hear every day, like COTS, NIC and hardware accelerators, will be clarified.

So first of all what is “Compute Domain” versus “Compute Node” in ETSI’s terms?

(To get more details about NFV architecture, I recommend reading my blog on cheat sheet on NFV architecture or NFV MANO)

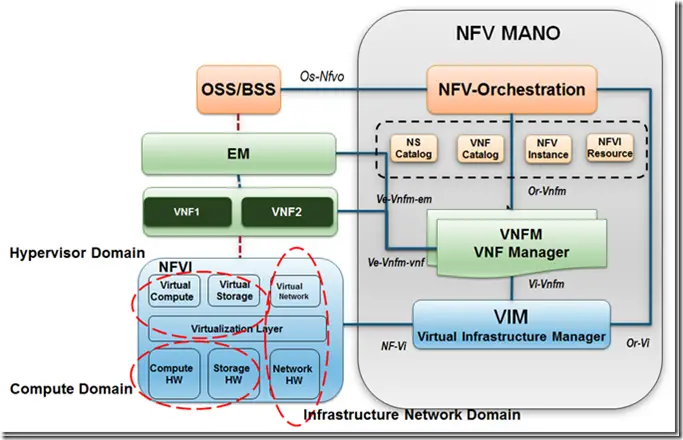

As shown clearly in the NFV Infrastructure block above, the Compute Domain includes both the compute hardware and the storage hardware. So Compute Domain is the superset, of which the Compute HW/node is just one part.

Surprised, aren’t you? How many of you were thinking of storage as a part of the compute domain?

So let’s go deeper into what is included in the compute domain:

The Three Parts of the Compute Domain

1. Compute Node

In a COTS (commercial off-the-shelf) architecture, the compute node includes the multi-core processor and chipset, and may include the following physical resources:

· CPU and chipset (for example x86, ARM, etc.)

· Memory subsystem

· Optional hardware accelerators (e.g. co-processor)

· NICs (Network Interface Cards, with optional accelerators)

· Storage internal to the blade (non-volatile memory, local disk storage)

· BIOS/boot loader (part of the execution environment)

A COTS server blade is an example of a compute node.
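As a rough sketch, the resource list above can be modelled as a small data structure. The class and field names below (`ComputeNode`, `Nic`, `accelerators` and so on) are my own illustrative choices, not ETSI-defined identifiers, and the sample values are made up.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Nic:
    """A network interface card, optionally with on-board acceleration."""
    name: str
    speed_gbps: int
    has_accelerator: bool = False


@dataclass
class ComputeNode:
    """Hypothetical model of the physical resources in one COTS server blade."""
    cpu_arch: str                 # e.g. "x86" or "ARM"
    cores: int
    memory_gb: int
    nics: List[Nic] = field(default_factory=list)
    accelerators: List[str] = field(default_factory=list)  # optional co-processors
    local_storage_gb: int = 0     # storage internal to the blade
    boot_loader: str = "BIOS"     # part of the execution environment

    def summary(self) -> str:
        return (f"{self.cpu_arch} node: {self.cores} cores, "
                f"{self.memory_gb} GB RAM, {len(self.nics)} NIC(s), "
                f"{len(self.accelerators)} accelerator(s)")


# Illustrative values only, not taken from any real product
blade = ComputeNode(
    cpu_arch="x86",
    cores=32,
    memory_gb=256,
    nics=[Nic("nic0", 25), Nic("nic1", 25, has_accelerator=True)],
    accelerators=["crypto co-processor"],
    local_storage_gb=960,
)
print(blade.summary())
```

Keeping the NICs and accelerators as optional lists mirrors the point that they may or may not be on board the blade.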

2. Network Interface Card (NIC) and I/O Accelerators

As you can see, I listed the NIC as part of the compute node in the previous section. That is true, because in that case the NIC capability is on board the server. However, there is a recent trend towards a disaggregated model (for example, the Open Compute Project, OCP). In this form factor, the CPU blades/chassis are separated from the NIC/accelerator chassis and the storage chassis, and the interconnection between the blades/chassis could be over optical fiber. For this reason, and because of its importance, the NIC deserves its own explanation.

The main function of the NIC is to provide network I/O functionality to and from the CPU.

The vision of NFV is to run network functions on standard, generic x86 servers. In practice, however, there are many I/O-intensive applications (for example, a virtual router) that demand acceleration technologies to improve the I/O throughput of the machines.

Some acceleration techniques involve the following:

· Hardware acceleration such as Digital Signal Processing (DSP), packet header processing, packet buffering and scheduling.

· Cache management features.

· Additions to the Instruction Set Architecture (ISA) (e.g. x86, ARMv8, etc.) which implement new software acceleration features.
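The ISA additions mentioned above are visible to software, so a server can be checked for them before a throughput-hungry function is placed on it. Here is a minimal sketch, assuming a Linux x86 host where `/proc/cpuinfo` carries a `flags` line (ARM kernels label it differently). The function names and the choice of flags to look for are my own assumptions, and the sample text is made up.

```python
from typing import Set

# Flag names as Linux reports them on x86; which ones matter is an assumption.
ACCEL_FLAGS = {"aes", "avx2", "sse4_2"}


def cpu_flags(cpuinfo_text: str) -> Set[str]:
    """Collect the flags listed on the first 'flags' line of cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def accel_extensions(cpuinfo_text: str) -> Set[str]:
    """Return which of the acceleration-related flags the CPU advertises."""
    return cpu_flags(cpuinfo_text) & ACCEL_FLAGS


# Made-up sample in the /proc/cpuinfo layout
sample = "processor\t: 0\nflags\t\t: fpu sse2 sse4_2 aes avx\n"
print(sorted(accel_extensions(sample)))
```

On a real host you could pass in the contents of `/proc/cpuinfo` instead of the sample string.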

3. Storage Building Block

The storage infrastructure includes different primary classes of drives that need to be understood: Hard Disk Drives (HDDs), Solid State Disks (SSDs), cache storage, etc.

3.1 Hard Disk Drives

A hard disk drive (HDD), or hard disk, is a data storage device that uses magnetic storage to store and retrieve digital information using one or more rigid, rapidly rotating disks (platters) coated with magnetic material. The platters are paired with magnetic heads, which read and write data on the platter surfaces. Data is accessed in a random-access manner, which means that data can be retrieved in any order and not only sequentially. An HDD is non-volatile storage, which means it retains stored data even when powered off.

3.2 Solid State Disks (SSD)

Solid State Disks (SSDs), unlike HDDs, do not have moving parts. Therefore, compared with HDDs, SSDs are typically more resistant to physical shock, run silently, and have lower access time and lower latency. This makes them a suitable technology for applications that perform many random accesses. They are, however, more expensive than HDDs.
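Part of the latency gap comes from pure mechanics: an HDD has to wait for the platter to rotate the requested sector under the head, which on average takes half a revolution. A quick back-of-the-envelope sketch follows. The function name is mine, seek time is deliberately ignored, and the spindle speeds are just common examples.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait for a sector to come under the head: half a revolution."""
    if rpm <= 0:
        raise ValueError("rpm must be positive")
    ms_per_revolution = 60_000 / rpm   # 60,000 ms in one minute
    return ms_per_revolution / 2


for rpm in (5400, 7200, 15000):
    print(f"{rpm} RPM: {avg_rotational_latency_ms(rpm):.2f} ms")
```

An SSD has no platter to wait for, so this component of the access time simply does not exist.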

3.3 Hybrid Disk Drive

Increasingly popular, hybrid hard drives offer HDD capacity with SSD-like speeds by placing traditional rotating platters and a small amount of high-speed flash memory in a single drive. The beauty of a hybrid drive is that it uses the flash memory as a high-speed cache (or possibly as a tier) and the HDD platters for persistent storage.
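To make the caching idea concrete, here is a toy model of a hybrid drive. It assumes a simple least-recently-used (LRU) policy for the flash cache. Real drives use firmware-specific policies, so the class name, the counters and the eviction rule are all illustrative assumptions.

```python
from collections import OrderedDict


class HybridDrive:
    """Toy model: a small LRU flash cache in front of a large platter store."""

    def __init__(self, flash_blocks: int):
        self.flash_blocks = flash_blocks
        self.flash = OrderedDict()   # block id -> data, least recently used first
        self.platters = {}           # persistent copy of every block
        self.flash_hits = 0
        self.platter_reads = 0

    def write(self, block: int, data: bytes) -> None:
        self.platters[block] = data          # always persist to the platters
        self._cache(block, data)

    def read(self, block: int) -> bytes:
        if block in self.flash:
            self.flash_hits += 1
            self.flash.move_to_end(block)    # mark as most recently used
            return self.flash[block]
        self.platter_reads += 1
        data = self.platters[block]          # slow path: mechanical read
        self._cache(block, data)
        return data

    def _cache(self, block: int, data: bytes) -> None:
        self.flash[block] = data
        self.flash.move_to_end(block)
        if len(self.flash) > self.flash_blocks:
            self.flash.popitem(last=False)   # evict the least recently used block


drive = HybridDrive(flash_blocks=2)
for b in range(3):
    drive.write(b, bytes([b]))
drive.read(2)   # still in flash
drive.read(0)   # evicted earlier, so served from the platters
drive.read(0)   # now cached again
print(drive.flash_hits, drive.platter_reads)
```

Frequently read blocks stay in flash and are served quickly, while everything remains safely stored on the platters.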

So understanding the three parts, “Compute Node”, “NIC” and “Storage”, completes the picture of the Compute Domain. Understanding these basic parts is really important, as they form the server on which the complete NFV architecture is built.

Drop me a line if you found this basic understanding of the server useful.

Hi Faisal,

Thanks for sharing your thoughts, valuable as usual. On the topic of the computing domain, I’d like to add some points to the discussion (note that these are my own points of view, not concepts reported from any company or other person):

– I would spend more words on NFV and NFVI: though you have to define computing domain resources in the NFV descriptor files, logically speaking they are not supposed to be strictly connected. Maybe the “hardware independent” character of NFV needs to be stated at the very beginning of the thread.

– In my view, what is still not well defined is the elastic assignment of the computing domain (through the MANO, of course). In a future scenario where we will have distributed computing capabilities spread over the network, from the classic data center down to the edge of the network, close to the end user, it is frustrating not to have a chance to play with different scheduling policies and a finer-grained definition of computing slices for better resource sharing. This is an area that needs more focus.

– Still talking about elastic deployment of services across a wide range of characteristics (from pseudo-real-time to best effort), the use of COTS should be preferred, but not as a limitation. A full set of Hardware Assisted Virtualization (HAT) features as well as Hardware Accelerators (HA, like encryption/decryption, cryptography, data compression and, as you mentioned in chapter 2, traffic protocol engines) is sometimes the only suitable way to deploy a well-performing end-to-end service chain. My feeling is that the community is convinced of the need for HA while moving to the edge of the network. However, I would stress the need to count on a “sliced” structure for them, or the isolation between different services cannot be guaranteed. Security is a very important issue, to be considered right now.

Best Regards,

Carlo

Hi Carlo,

Thanks for your thought-provoking comments. Some points from my side to add to yours.

1. Saying NFV is hardware independent means that it is independent of a particular brand or proprietary hardware. In the end it still depends on the machine on which it runs, which in this case is COTS hardware. And as we are talking about carrier-class network functions, we have to specify the computing resources needed to run particular functions, for example how much CPU, memory or storage is needed to guarantee a particular SLA of a network function.

2. I agree with you. Elasticity needs to be well defined in NFV. In particular, it needs strict control and defined conditions that would trigger scaling in and out (also up and down) of the resources. And if you need distributed elastic resources, you would have to depend on SDN to help achieve this objective.

3. I agree with you that hardware acceleration would be needed to help achieve critical functions, in particular those that depend on high throughput and low latency.

Regards,

Faisal

If a tier-1 service provider decides to deploy NFV for the first time and run it on its current HW appliances, must it check whether those appliances can have their configuration changed by NFV MANO, or must it replace them with others compatible with NFV concepts from an NFV appliance vendor?

Waiting for your kind response.

Regards.

Thanks Nabil !

It depends on what the operator wants to run in NFV. If it is a control-plane-intensive VNF, then he can use the current x86 servers. If he needs to run throughput-intensive applications like switching or routing, he should have some sort of hardware or software acceleration available on the servers.

Faisal

Hello Faisal,

Thanks for sharing your thoughts.

Is there a clear way to explain the difference between NFV and generic software virtualization? VNFs require an orchestrator to connect a bunch of them to create a network service. In this blog you raised a special requirement for VNFs, which is the need for acceleration technologies to help improve I/O throughput. Other than the connectivity to create network services and improving I/O throughput, are there any other requirements that dictate a different way to create and manage VNFs than we use with plain old software virtualization?

Thanks,

Adel

Adel, generic server virtualization does not involve strict networking requirements, while some VNFs are data-throughput-intensive applications, for example a virtual router or switch. NFV is more carrier class in terms of reliability, manageability and security. You may say that OpenStack would be enough to run in clouds/server virtualization, but it is not enough to run in NFV owing to the stringent requirements mentioned above.

Great post

Faisal Khan, thank you so much for sharing your mind map and your approach to easy conceptualization!

Thank you Maru

Nice and Great

Thanks Shahid for visiting and commenting