Let’s face it, we would all love to have everything cloud native, including cloud native VNFs. In practice, however, we need to live with legacy VNFs.

Legacy VNFs are monolithic, with bulky code, and in some cases run as an all-in-one virtual machine. They can be called virtual, but not cloud based, or “cloud native” to be more specific.

If you want a quick overview of what cloud native is, see my other blog post.

It is important to understand cloud native VNFs because the 5G Core is cloud native.

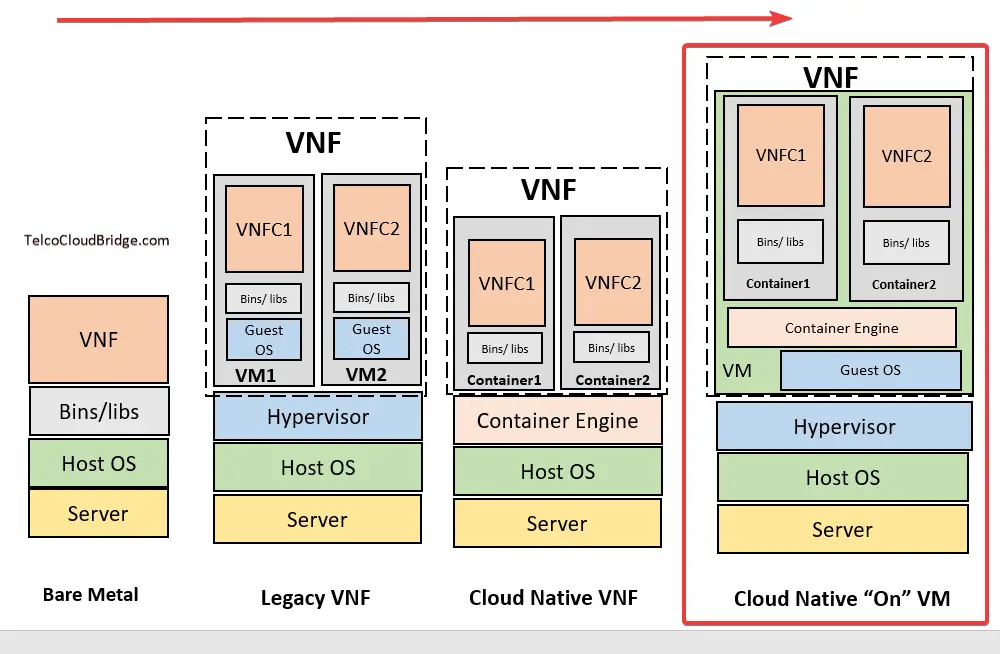

To help you understand and appreciate a cloud native VNF, I will take you on a journey starting with bare metal, then a legacy VNF, and finally a cloud native VNF.

Before we start, I would like to point out one simple thing that helps explain how VNFs normally work. A VNF can map one to one onto a VM; that is, the VNF is a single component that completely occupies the virtual machine.

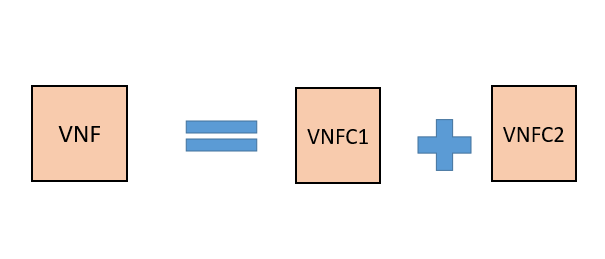

In practice, however, a VNF consists of multiple components, also called microservices or VNFCs (VNF Components), as ETSI calls them. Because VNFs can be quite complex, such as an EPC in the mobile network, no vendor will build an “all in one” VNF that occupies a single virtual machine. Instead, it will be many small VNFCs that are connected and together provide the VNF functionality.

So let’s say the VNF we will use in our example consists of two VNFCs.
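To make the example concrete, here is a minimal sketch of the VNF/VNFC relationship as a toy data model. The class names, fields and the vendor name are hypothetical illustrations, not the ETSI VNF descriptor (VNFD) information model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class VNFC:
    """One component (microservice) of a VNF. Hypothetical toy model."""
    name: str


@dataclass
class VNF:
    """A VNF delivers its functionality through its connected VNFCs."""
    name: str
    vendor: str
    components: List[VNFC] = field(default_factory=list)

    def is_single_component(self) -> bool:
        # The "one VNF to one VM" case: the VNF is a single component.
        return len(self.components) == 1


# Hypothetical example VNF with the two components used in this post.
example_vnf = VNF(
    name="VNF1",
    vendor="VendorA",
    components=[VNFC("VNFC-1"), VNFC("VNFC-2")],
)

print(len(example_vnf.components))        # 2
print(example_vnf.is_single_component())  # False
```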

Having understood the components of a VNF, let’s now begin our journey.

Bare Metal

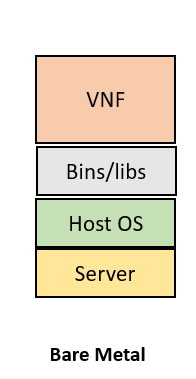

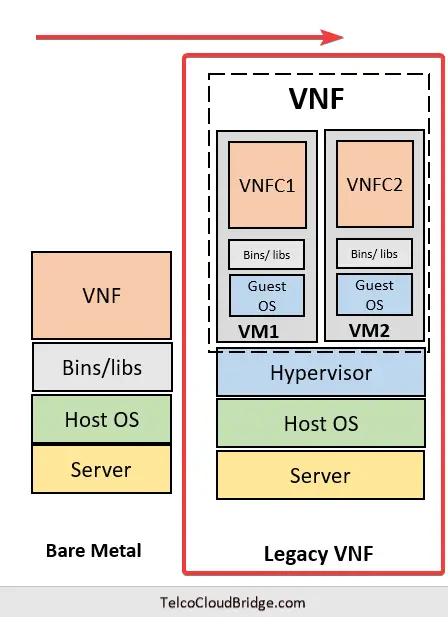

On bare metal, we run the application/VNF directly on the server. This is the legacy way to run applications: take a server and install the application. There is no way to slice/partition the server to run more applications/VNFs. The advantage, however, is that the single application gets access to all of the machine’s compute, storage and memory resources.

So in our case, it is not possible to run our example VNF with its two components. What is possible is to run a single-component VNF directly on the operating system (such as Linux) of the bare-metal server, as shown below.
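The bare-metal rule described above can be sketched as a placement check. This is a toy model assuming, as the post does, that a bare-metal server runs exactly one application on its host OS; the function name and the returned fields are hypothetical.

```python
from typing import Dict, List


def deploy_on_bare_metal(vnfc_names: List[str], host_os: str = "Linux") -> Dict[str, object]:
    """Place a VNF directly on a bare-metal server's host OS (hypothetical helper).

    A bare-metal server cannot be sliced, so in this model only a
    single-component VNF can be placed on it.
    """
    if len(vnfc_names) != 1:
        raise ValueError(
            f"bare metal cannot be partitioned: got {len(vnfc_names)} components"
        )
    # The single component gets the whole server's compute, memory and storage.
    return {"host_os": host_os, "runs": vnfc_names[0], "share_of_server": 1.0}


print(deploy_on_bare_metal(["VNFC-1"]))

# The two-component example VNF is rejected.
try:
    deploy_on_bare_metal(["VNFC-1", "VNFC-2"])
except ValueError as err:
    print("rejected:", err)
```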

Legacy VNF

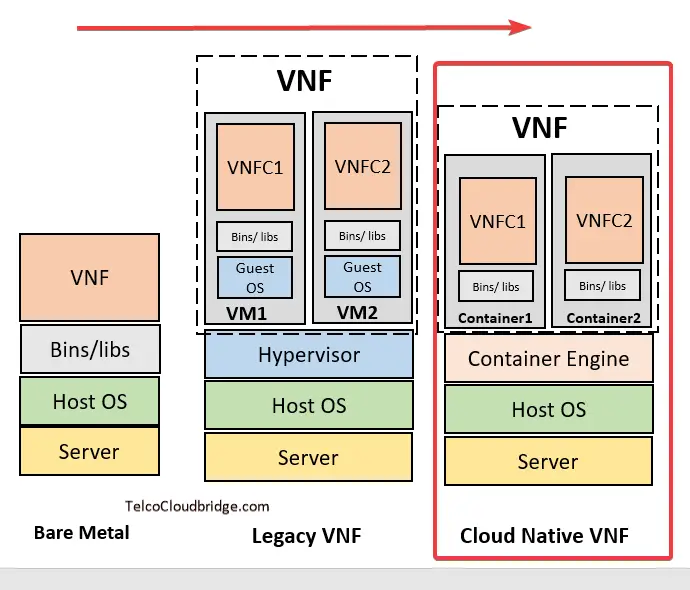

Because a legacy VNF runs on virtual machines, you would need two virtual machines to run this VNF. Running virtual machines also requires a hypervisor to slice the server into multiple logical servers. With two virtual machines available, each VNFC can be kept in a different virtual machine.

Do note, however, that each virtual machine needs its own operating system (OS), called the guest OS, on top of the host OS. This is an extra burden on the server, as you will see shortly when we compare it with containers.
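The overhead described above comes from the OS count: one host OS plus one guest OS per VM. Here is a minimal sketch, assuming (as the post does) a hypervisor running on a host OS and one VNFC per VM; the function name and fields are hypothetical.

```python
from typing import Dict, List


def deploy_legacy_vnf(vnfc_names: List[str]) -> Dict[str, object]:
    """Place each VNFC in its own VM on top of a hypervisor (hypothetical helper).

    Every VM carries its own guest OS, so the server runs one host OS
    plus one guest OS per VM.
    """
    vms = [
        {"vm": f"VM{i + 1}", "guest_os": "Linux", "runs": name}
        for i, name in enumerate(vnfc_names)
    ]
    return {
        "needs_hypervisor": True,
        "vms": vms,
        "os_instances": 1 + len(vms),  # host OS + one guest OS per VM
    }


layout = deploy_legacy_vnf(["VNFC-1", "VNFC-2"])
print(layout["os_instances"])  # 3
```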

Cloud Native VNF

Cloud native VNFs make use of containers. Because containers are lightweight and do not need a separate guest OS, each VNFC can run as a separate container directly on the host OS. You do not need a hypervisor, but you do need a container engine to spin up the containers.

As you have probably guessed, cloud native is the preferred approach to building VNF applications today. Containers are lightweight because they share the Linux kernel of the host machine, without the burden of an additional guest operating system.
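For comparison with the VM-based layout, here is a toy sketch of the container-based layout. It assumes every container shares the host kernel, so the OS count stays at one however many VNFCs the VNF has; the function name and fields are hypothetical and do not correspond to any container engine API.

```python
from typing import Dict, List


def deploy_cloud_native_vnf(vnfc_names: List[str]) -> Dict[str, object]:
    """Run each VNFC as its own container directly on the host OS (hypothetical helper).

    Containers share the host's Linux kernel, so no guest OS is added.
    """
    containers = [
        {"container": f"C{i + 1}", "runs": name}
        for i, name in enumerate(vnfc_names)
    ]
    return {
        "needs_hypervisor": False,
        "needs_container_engine": True,
        "containers": containers,
        "os_instances": 1,  # only the host OS, regardless of container count
    }


two = deploy_cloud_native_vnf(["VNFC-1", "VNFC-2"])
ten = deploy_cloud_native_vnf([f"VNFC-{n}" for n in range(1, 11)])
print(two["os_instances"], ten["os_instances"])  # 1 1
```

Compared with the VM sketch for the same two VNFCs (three OS instances), the saving grows with every component added.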

But here is a challenge. How do you keep different cloud native VNFs separate from each other? Would you really run one vendor’s containers in the same containerized environment as another vendor’s?

That takes us to the more practical approach to running cloud native VNFs today.

Cloud Native VNFs “On” VM

The most practical approach followed today is to keep different cloud native VNFs on different VMs. That way you get the flexibility of running many containers within one VNF and, at the same time, isolation between different VNFs (perhaps from different vendors).

So a setup like the following will allow you to add a second VNF easily in a second VM on the same server.

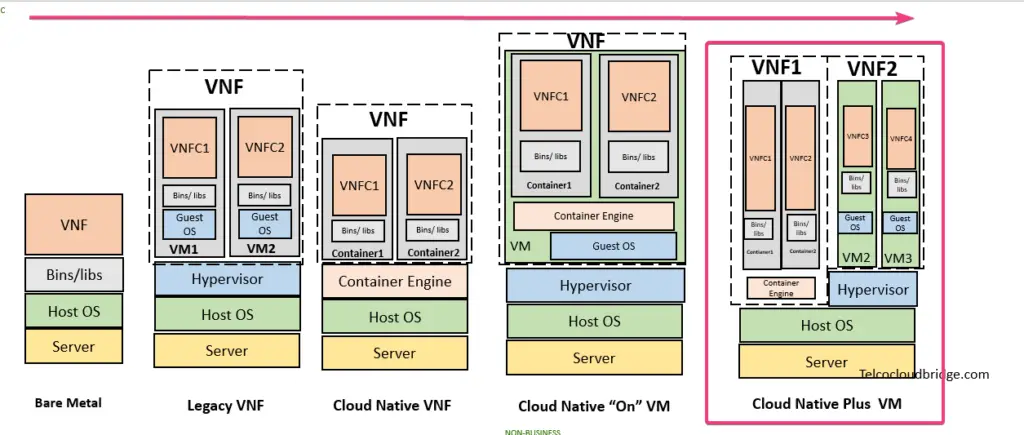
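The isolation property behind this setup can be expressed as a simple check: no VM should host containers from more than one vendor. The sketch below is a toy model; the input tuple format, vendor names and function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical input format: (vnf_name, vendor, [vnfc_names])
VnfSpec = Tuple[str, str, List[str]]


def place_on_vms(vnfs: List[VnfSpec]) -> List[Tuple[str, str, str]]:
    """Give each cloud native VNF its own VM; its VNFCs run as containers inside.

    Returns (vm, vendor, container) rows.
    """
    rows = []
    for i, (vnf_name, vendor, vnfcs) in enumerate(vnfs, start=1):
        vm = f"VM{i}"
        for vnfc in vnfcs:
            rows.append((vm, vendor, f"{vnf_name}/{vnfc}"))
    return rows


def vendors_isolated(rows: List[Tuple[str, str, str]]) -> bool:
    """True if no VM hosts containers from more than one vendor."""
    vendors_per_vm: Dict[str, Set[str]] = defaultdict(set)
    for vm, vendor, _container in rows:
        vendors_per_vm[vm].add(vendor)
    return all(len(vendors) == 1 for vendors in vendors_per_vm.values())


rows = place_on_vms([
    ("VNF1", "VendorA", ["VNFC-1", "VNFC-2"]),
    ("VNF2", "VendorB", ["VNFC-1", "VNFC-2", "VNFC-3"]),
])
print(vendors_isolated(rows))  # True

# Counter-example: containers of two vendors packed into the same VM.
mixed = [("VM1", "VendorA", "VNF1/VNFC-1"), ("VM1", "VendorB", "VNF2/VNFC-1")]
print(vendors_isolated(mixed))  # False
```

Adding a second VNF here only means appending one more entry to the list passed to `place_on_vms`, which gets its own VM.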

Cloud Native VNFs Plus VMs

We live in a practical world.

There will be legacy VNFs alongside cloud native VNFs for quite some time, because a lot of legacy VNFs are already deployed in the network, and it will take time before they are phased out in favor of more modern VNFs based on cloud native applications. A variation of the previous setup is to run cloud native VNFs directly on the server in parallel to legacy VNFs in VMs. In this diagram, you see a legacy VNF2 running alongside a cloud native VNF1 on the same server.
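The mixed layout can be sketched the same way: cloud native VNF1 runs as containers on the host OS while legacy VNF2 runs in VMs, so the server needs both a container engine and a hypervisor. This toy model assumes one VM (and one guest OS) per legacy VNFC, as in the legacy example earlier; the function name and fields are hypothetical.

```python
from typing import Dict, List


def mixed_server(cnf_vnfcs: List[str], legacy_vnfcs: List[str]) -> Dict[str, object]:
    """One server: VNF1 containers on the host OS, VNF2 VNFCs in VMs (hypothetical helper)."""
    return {
        "host_containers": [f"VNF1/{c}" for c in cnf_vnfcs],
        "vms": [f"VNF2/{c}" for c in legacy_vnfcs],
        "needs_container_engine": bool(cnf_vnfcs),
        "needs_hypervisor": bool(legacy_vnfcs),
        # Containers share the host kernel; each legacy VM brings its own guest OS.
        "os_instances": 1 + len(legacy_vnfcs),
    }


server = mixed_server(["VNFC-1", "VNFC-2"], ["VNFC-1", "VNFC-2"])
print(server["os_instances"])  # 3
```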

What is the future?

The future is all about cloud native VNFs. There will be a time when legacy VNFs are phased out. Then there will come a stage when containers are mature enough to provide sufficient isolation to run cloud native VNFs from different vendors on the same Linux OS. Perhaps that is when there will be no need for virtual machines, and we can live in a world without them for good. When this will happen, we are not sure at the moment.

Do you agree?
